Main research focus

Our research focuses on bioacoustics and ecology, and we work with a wide range of animal taxa, such as cetaceans, bats and birds. As we are based at Nuremberg Zoo, we are also involved in a range of more applied animal welfare studies, for example on animal behavior under human care and on stress physiology. In a current project, we aim to combine bioacoustics and applied animal welfare research and to investigate whether animal behavior and welfare state can be predicted from acoustic data using artificial intelligence approaches. In collaboration with engineers from the CoSys-Lab at the University of Antwerp, we develop large-scale microphone and hydrophone arrays that can be used to track wild animals and to better understand their interactions with their habitat and with man-made structures such as wind turbines. In our manatee house, a 700 m² greenhouse at Nuremberg Zoo, we keep a large colony of nectar-feeding bats that are accustomed to using special feeding stations. We are currently working on a project to automatically identify individual bats at the feeding stations by their distinct wing membrane patterns.

Bat wing membrane pattern as individual markers

In our so-called manatee house, a 700 m² greenhouse, we keep a large colony of nectar-feeding bats (Glossophaga soricina). The animals are accustomed to flying to feeding stations, which are sometimes equipped with sonar beacons (see video sequence). To identify visiting bats individually, we are developing a system that automatically recognizes wing patterns. Bat wing membranes have a distinct pattern of blood vessels, elastin fibers and scars. These patterns become visible under strong infrared (IR) backlighting and can be captured with high-speed cameras. Automatic individual recognition will allow us to adjust food rewards for each bat and to train free-flying, unrestrained bats.

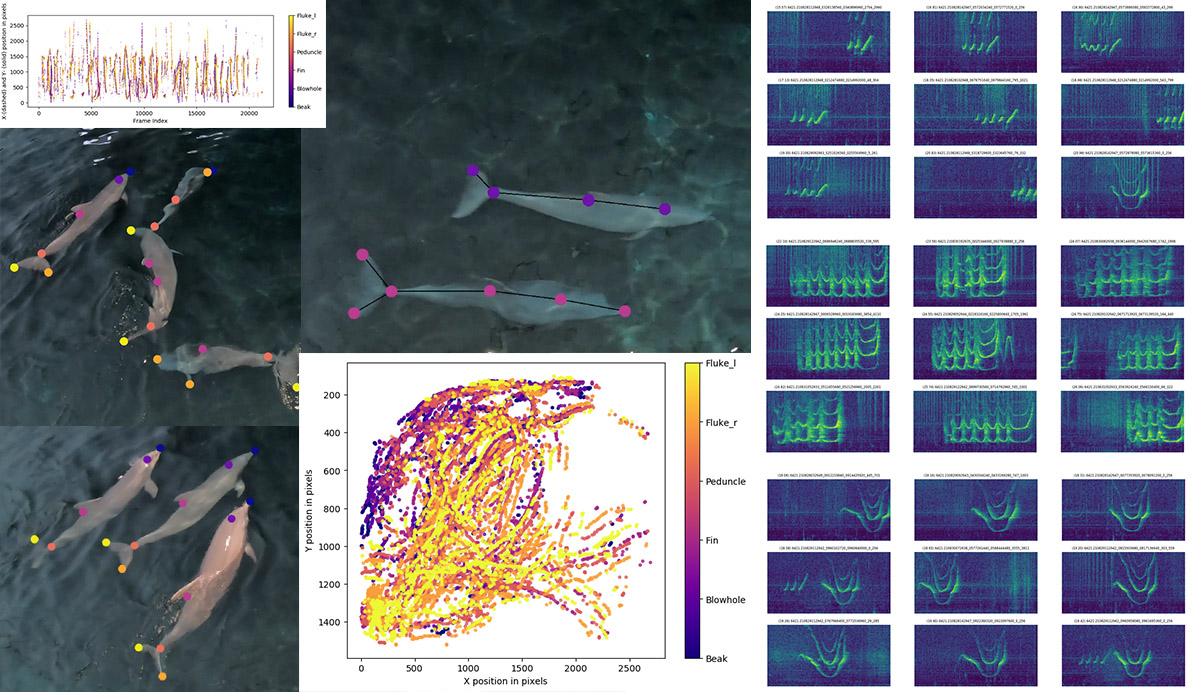

Predicting dolphin behavior from soundscape recordings

Bottlenose dolphins are highly vocal animals, and certain behaviors are associated with specific vocalizations or with other sounds such as splashing. In this project, we aim to predict dolphin behavior from acoustic features extracted from audio recordings. Recordings were made with SoundTrap hydrophones in a 7-million-liter pool housing six female bottlenose dolphins (Tursiops truncatus). We want to correlate the acoustic features of the soundscape with movement data acquired from video recordings (analyzed with DeepLabCut, see image) and with human observations. We hypothesize that sound features can be classified into distinct acoustic events and that these events are linked to specific behaviors. Our approach will not only help to simplify and standardize the assessment of dolphin behavior under human care but can also serve as a basis for acoustic behavioral monitoring of wild dolphin populations.
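To illustrate what "acoustic features extracted from audio recordings" can mean in practice, here is a minimal sketch in Python with NumPy. It splits a recording into overlapping frames and computes two simple per-frame features: RMS energy and spectral centroid. These two features, the function name `frame_features` and the `frame_len`/`hop` values are illustrative assumptions, not the feature set used in the project, and the sketch assumes the hydrophone recording has already been loaded as a mono NumPy array.

```python
import numpy as np


def frame_features(signal, sample_rate, frame_len=1024, hop=512):
    """Hypothetical helper: per-frame RMS energy and spectral centroid (Hz).

    Returns an array of shape (n_frames, 2), one row per analysis frame.
    """
    signal = np.asarray(signal, dtype=float)
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")

    n_frames = 1 + (len(signal) - frame_len) // hop
    window = np.hanning(frame_len)  # taper each frame to reduce spectral leakage
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sample_rate)  # bin centre frequencies

    feats = np.empty((n_frames, 2))
    for i in range(n_frames):
        frame = signal[i * hop : i * hop + frame_len]
        # RMS energy: overall loudness of the frame (e.g. splashing vs. silence)
        rms = np.sqrt(np.mean(frame ** 2))
        # Spectral centroid: magnitude-weighted mean frequency of the frame
        mag = np.abs(np.fft.rfft(frame * window))
        total = mag.sum()
        centroid = (freqs * mag).sum() / total if total > 0 else 0.0
        feats[i] = (rms, centroid)
    return feats
```

Row `i` covers the time from `i * hop / sample_rate` seconds onwards, so the feature matrix could be aligned with time-stamped DeepLabCut movement data or observer protocols before any classification into acoustic events. In a real analysis, richer features (for example whistle contours or click rates) would likely be needed.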